As this report about automation in quality assurance states, achieving optimal test coverage is among the hardest tasks in QA. This is because the metric depends on many variables, e.g. the geography of end users, device usage patterns, and the level of risk tolerance agreed between the developer and stakeholders. At the same time, improving this metric is a top agenda item for teams, as it directly affects quality. Automation has markedly eased the challenge. In this piece, we’ll discuss the essence of automated test coverage, its perks, and its areas of application across the stages of the testing lifecycle.

The importance of test coverage in quality engineering

It is hard to judge the relevance of a metric without first understanding its basics.

Test coverage indicates the share of a solution that is backed by test cases. It encompasses code coverage, branch coverage, function coverage, and other types of measurements.

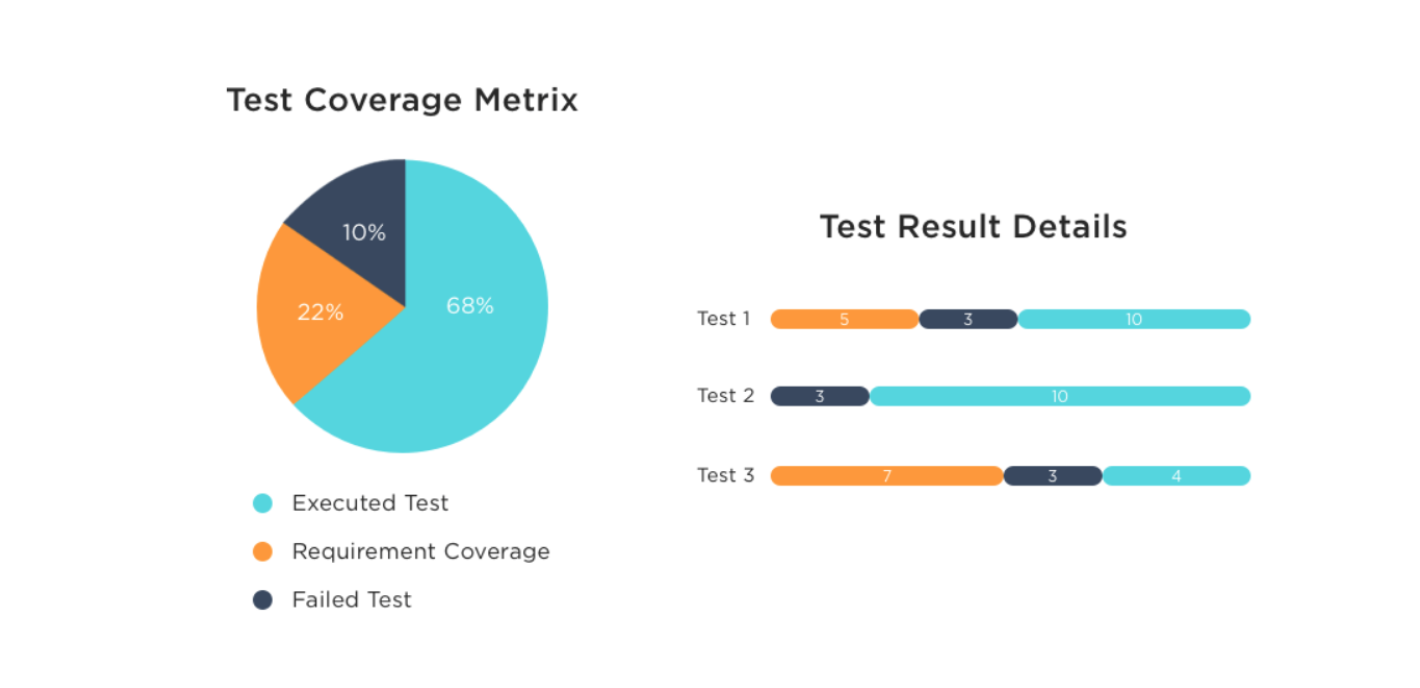

The percentage that is optimal for a particular IT initiative hinges on its industry, scale, and requirements. Since fully exhaustive test coverage is unattainable, a commonly cited minimum threshold is 60%, while the optimal rate ranges between 70% and 80%, depending on feature criticality and prioritization. The rate is gauged with dedicated instruments, e.g. TestRail, ReQtest, Zephyr, and others; for code coverage, it is the proportion of executed code statements out of their total number.
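To make the arithmetic concrete, here is a minimal sketch of the statement-coverage calculation and the thresholds mentioned above. The function names and the "acceptable" label for the 60–70% band are illustrative assumptions, not part of any particular tool.

```python
def statement_coverage(executed: int, total: int) -> float:
    """Return the percentage of statements executed by the test suite."""
    if total <= 0:
        raise ValueError("total must be a positive number of statements")
    if not 0 <= executed <= total:
        raise ValueError("executed must be between 0 and total")
    return 100.0 * executed / total


def rate_coverage(pct: float, minimum: float = 60.0, optimal_low: float = 70.0) -> str:
    """Classify a percentage against the thresholds cited in the text.

    The labels are hypothetical; adjust them to your own agreement
    with stakeholders.
    """
    if pct < minimum:
        return "below minimum"
    if pct < optimal_low:
        return "acceptable"
    return "optimal or above"


if __name__ == "__main__":
    pct = statement_coverage(executed=150, total=200)
    print(f"{pct:.1f}% -> {rate_coverage(pct)}")
```

In practice, a coverage tool supplies the executed and total counts; the thresholds are project-specific and should follow feature criticality.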

What makes this indicator so critical? An optimal rate means that the core and operation-critical functionality is backed by the necessary verifications, so that the resulting program behaves as outlined in the specifications under the conditions that matter most. Accordingly, the customer obtains the outcomes they need to meet their business objectives.

Moreover, by sorting tasks by priority and omitting redundant scenarios, specialists save time and resources and, as a result, trim costs. This matters greatly in today’s competitive market, where speed and productivity are game-changers.

At the same time, one should be aware that maintaining high coverage is not an end in itself. The goal is to write quality tests and couple them with other practices such as pair programming and sound DevOps routines.

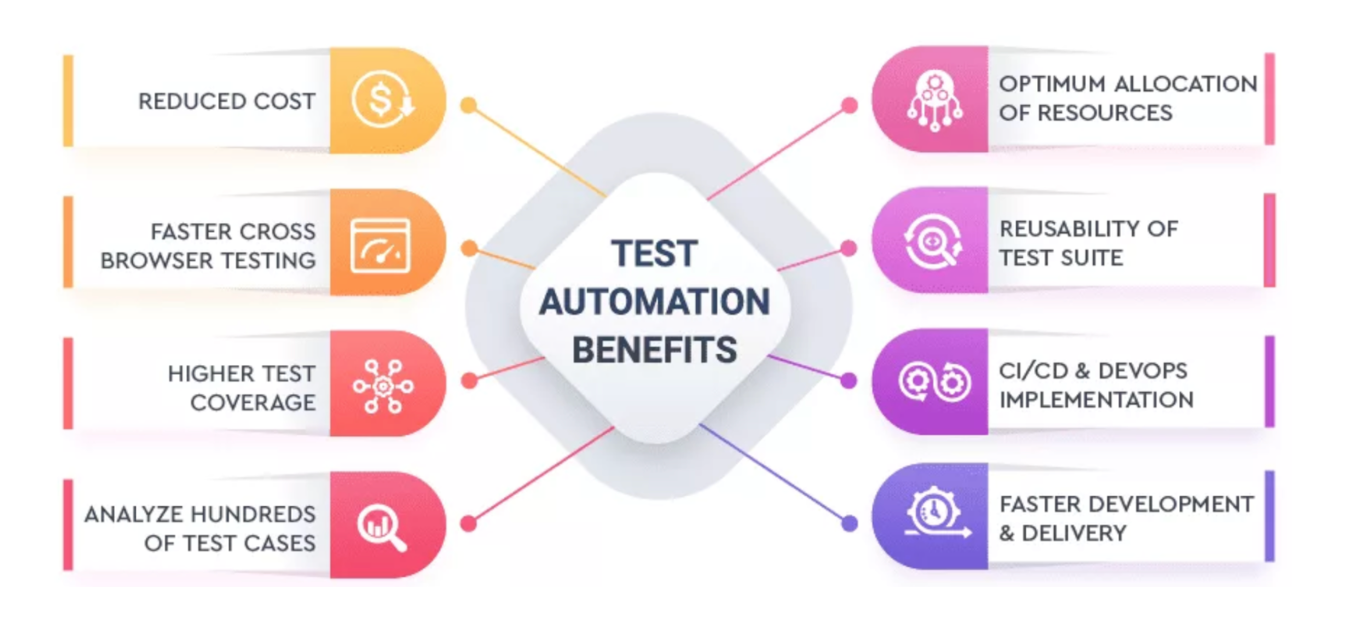

Three ways automated test coverage maximizes your QA efforts

Automated test coverage is an effective technique that allows specialists to rapidly conduct a series of checks at scale by means of dedicated programs and scripts. Performed manually, these verifications are often excessively laborious and data-intensive. By turning to automation, teams cover more features. Moreover, verifications can run simultaneously across multiple OSs and devices, with various combinations of settings, to encompass a wide range of scenarios.
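One way to picture the "combinations of settings" is as a configuration matrix that an automated suite iterates over. The sketch below builds such a matrix with the standard library; the dimension names and values are hypothetical examples.

```python
from itertools import product


def build_matrix(dimensions: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of the given configuration values."""
    names = list(dimensions)
    value_lists = [dimensions[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


if __name__ == "__main__":
    # Hypothetical dimensions; replace with the platforms you support.
    matrix = build_matrix({
        "os": ["Windows", "macOS", "Android"],
        "browser": ["Chrome", "Firefox"],
        "locale": ["en", "de"],
    })
    print(len(matrix), "configurations")
    for config in matrix[:3]:
        print(config)
```

The full Cartesian product grows quickly, so teams often trim it to the combinations their users actually run.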

Additionally, automated test coverage lets specialists focus on intricate and exploratory tasks while scripts handle the monotonous routine. This gives them the opportunity to pay closer attention to complicated and borderline cases and to raise quality.

Below, we explain how to apply automated test coverage at different stages of the QA cycle.

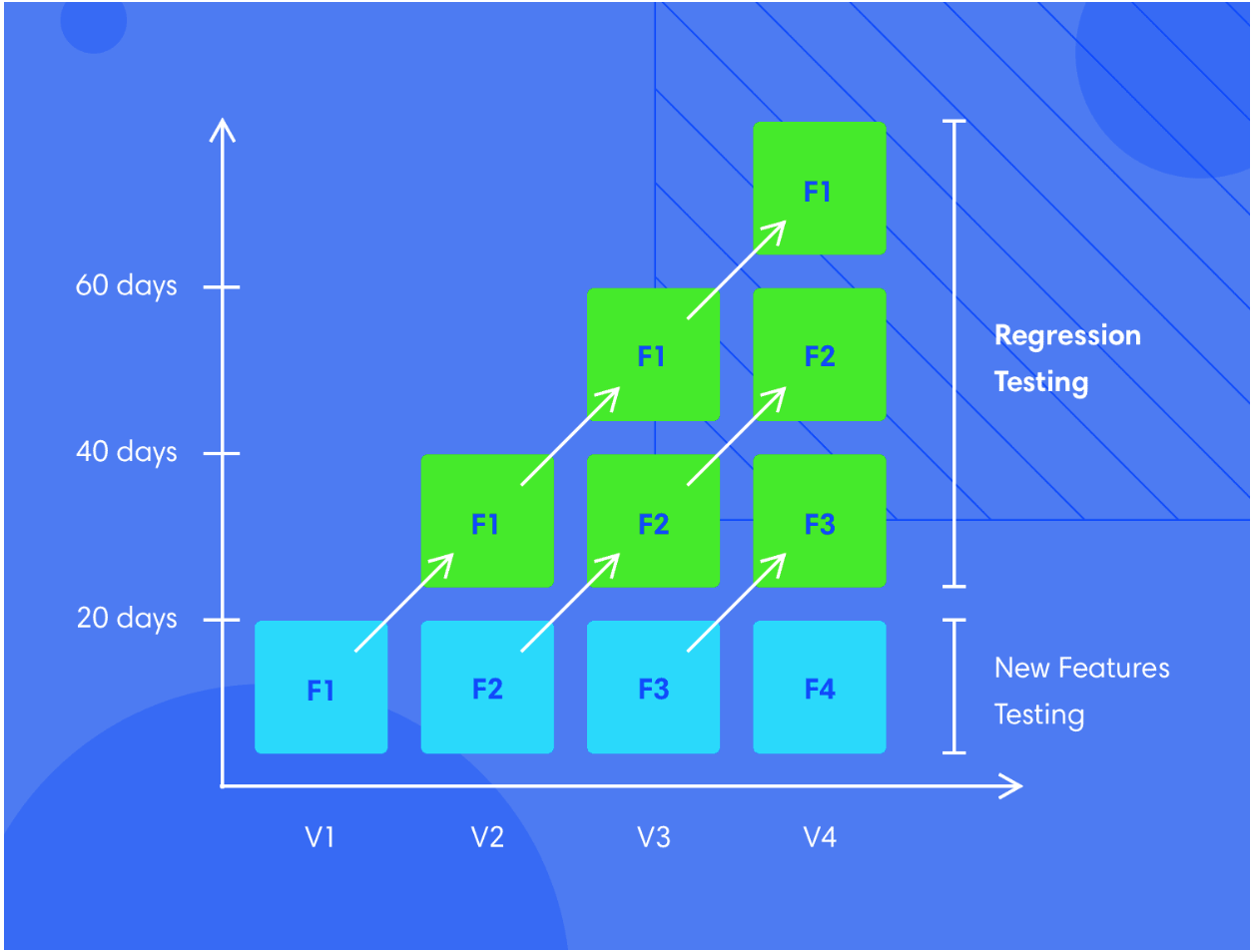

Regression automation coverage

This verification method is intended to ensure that a change to the code doesn’t break the behavior, performance, or other properties of previously built features. It is not uncommon for a fix to one issue to inadvertently cause another. Therefore, it’s advisable to rerun this category of verifications at short intervals as the solution is incrementally extended.

Some teams might be tempted to organize their processes around functional testing alone. Although it seems reasonable to go through a newly added feature only once and move forward along the cycle, the reality is that one never knows what alterations in behavior a recent feature is going to entail.

When making estimates for regression automation coverage, one should make certain that there will be a sustainable return on investment. For this purpose, it’s essential to evaluate the cost of setting up the necessary environments and infrastructure and of engaging automation QA (AQA) engineers. Implementing automated test coverage is likely to pay off if you are planning for a long product life cycle with ongoing maintenance. On top of that, this strategy is fundamental when you work with legacy code to revamp and extend existing software.
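A rough way to reason about return on investment is to estimate after how many regression runs the one-off setup cost is recovered. The sketch below uses a deliberately simplified cost model (a fixed setup cost plus a constant cost per run); both the model and the figures are assumptions for illustration only.

```python
import math
from typing import Optional


def break_even_runs(setup_cost: float,
                    manual_cost_per_run: float,
                    automated_cost_per_run: float) -> Optional[int]:
    """Return the number of runs after which automation has paid for itself.

    Returns None when an automated run is not cheaper than a manual one,
    because in that case the setup cost is never recovered.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(setup_cost / saving_per_run)


if __name__ == "__main__":
    # Hypothetical figures in any single currency.
    print(break_even_runs(setup_cost=10_000,
                          manual_cost_per_run=500,
                          automated_cost_per_run=50))
```

A real estimate would also account for script maintenance and infrastructure costs, which this simplified model folds into the per-run figure.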

The optimal instruments for this purpose are those that are compatible with your CI/CD pipeline and remain productive at all stages of the SDLC. To name a few, these are Selenium, Telerik Test Studio, Appium, Ranorex, and TestComplete, which between them support a wide range of programming languages, platforms, and devices.

The following steps outline how to fine-tune the workflow:

- Pinpoint cases that address your prioritized requirements,

- Write scripts and use appropriate instruments to optimize them through automation,

- Integrate the resulting checks into the suite,

- Leverage a robust CI mechanism to trigger verifications whenever enhancements are introduced to the software,

- Systematically review the analytics from the flow to adjust it as and when required.
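The first and third steps above can be sketched as a simple selection routine that picks the cases tied to prioritized requirements and orders them for the suite. The `RegressionCase` type, the 1-is-highest priority scale, and the requirement IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionCase:
    name: str
    requirement: str
    priority: int  # assumed scale: 1 = most important


def select_for_suite(cases, prioritized_requirements, max_priority=2):
    """Pick cases that address prioritized requirements, most important first."""
    chosen = [
        case for case in cases
        if case.requirement in prioritized_requirements
        and case.priority <= max_priority
    ]
    return sorted(chosen, key=lambda case: (case.priority, case.name))


if __name__ == "__main__":
    cases = [
        RegressionCase("login_works", "REQ-1", 1),
        RegressionCase("export_pdf", "REQ-7", 3),
        RegressionCase("checkout_total", "REQ-2", 1),
        RegressionCase("profile_avatar", "REQ-1", 2),
    ]
    for case in select_for_suite(cases, {"REQ-1", "REQ-2"}):
        print(case.priority, case.name)
```

A CI job would then execute the selected cases on every change; how that job is triggered depends on the CI system in use.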

Reporting and analytics

As we’ve mentioned before, engineering as many verification scripts as the documentation demands is not an end in itself. To succeed, one needs to closely scrutinize the impact that the increased number of cases has on overall product performance and quality. To achieve this, you need solid analytics in place.

Systematizing the results of your efforts gives you continuous awareness of the project’s progress, its impediments, and its blockers, and helps you close the gaps in meeting the automation coverage metrics.

Furthermore, reducing manual effort improves data accuracy, which makes it easier to tailor these measurements to the requirements.

How often should these deliverables be submitted? This hinges on the agreement between the stakeholders and the contractor, i.e. the service provider. However, it’s advisable that every change to the repository that involves risk, e.g. a bug fix or a functionality extension, be followed by a report.

As for reporting, software testing automation services encompass the following sequence of actions:

- Consider the indicators that you’ll monitor, e.g. performance, code testing scope, etc.,

- Draw up a template of the report; this should comprise the degree to which the code is covered with cases, their identification and environmental details, the particularities and characteristics of scripts, validation duration and results, and other information,

- Adopt instruments like TestRail, Zephyr, qTest, HP ALM, TestComplete, etc., for test execution management, summary compilation, and prompt communication of insights,

- Examine and assess the findings to recognize gaps and insufficiently covered modules and complement the suite with further cases if essential, e.g. to address corner cases,

- Lastly, adjust the automation coverage metrics to realign with ever-changing priorities.
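The report template and the gap analysis described above could be modeled along these lines. This is a minimal sketch: the field names and the 70% threshold are assumptions, and a real report would be fed by your test management and coverage tools.

```python
from dataclasses import dataclass


@dataclass
class ModuleReport:
    module: str
    environment: str          # e.g. OS and browser used for the run
    covered_statements: int
    total_statements: int
    duration_seconds: float
    passed: int
    failed: int

    @property
    def coverage_pct(self) -> float:
        """Share of the module's statements exercised by test cases."""
        if self.total_statements == 0:
            return 0.0
        return 100.0 * self.covered_statements / self.total_statements


def find_gaps(reports, threshold: float = 70.0) -> list[str]:
    """Return the modules whose coverage falls below the threshold."""
    return [r.module for r in reports if r.coverage_pct < threshold]


if __name__ == "__main__":
    # Hypothetical data for two modules.
    reports = [
        ModuleReport("billing", "Linux/Chrome", 450, 500, 42.0, 120, 0),
        ModuleReport("search", "Linux/Chrome", 90, 200, 12.5, 30, 2),
    ]
    print("Insufficiently covered:", find_gaps(reports))
```

Modules returned by `find_gaps` are candidates for additional cases, for instance to address corner cases.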

Risk-based services with the application of automation

This approach consists in streamlining and ordering tasks according to project uncertainties and vulnerabilities, as well as unforeseen effects. These can have a bearing on the overall project course, budget, timelines, and other critical characteristics. However, having well-thought-out risk-related procedures in place helps ensure that even if an inconsistency emerges, it won’t have a critical impact on the app’s operation.

A risk-centered model makes such events the yardstick and reference point in every facet of the pipeline, i.e. strategizing, prototyping, deploying, and documenting. When planning test automation coverage metrics, account for the following measures:

- Pinpoint the potential adverse effects related to usability, security, performance, and other properties,

- Gauge the risks in line with software intricacy, defect-prone areas, their strategic importance, and other variables. Put first the potential hurdles whose consequences are critical for operation and that are most likely to occur,

- Work the potential challenges into the overarching plan to provide for exhaustive checking of paramount cases,

- Ultimately, automate the risk-driven checks.
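A common way to gauge and rank risks as outlined above is to score each one by probability times impact. The sketch below assumes a 1-to-5 scale for both factors; the scale and the example risk areas are illustrative, not prescribed by the approach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    area: str
    probability: int  # assumed scale: 1 (unlikely) to 5 (almost certain)
    impact: int       # assumed scale: 1 (minor) to 5 (critical)

    @property
    def score(self) -> int:
        """Probability multiplied by impact; higher means test it sooner."""
        return self.probability * self.impact


def prioritize(risks):
    """Order risks so the most probable and most critical come first."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical risk register.
    register = [
        Risk("payment processing", probability=3, impact=5),
        Risk("profile page layout", probability=4, impact=1),
        Risk("login and session handling", probability=4, impact=5),
    ]
    for risk in prioritize(register):
        print(risk.score, risk.area)
```

The top entries of the ordered list are the first candidates for automated, risk-driven checks.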

Key takeaways

Eliminating gaps in the flow is feasible by embracing sound approaches, e.g. harmonizing automation and risk assessment, giving due consideration to regression, and systematizing the reporting flow. These and other recommended procedures will allow you to reach greater testing breadth quickly and more precisely.

To reap these benefits, you can turn to experienced software companies such as Andersen, which provide expert QA services. Their seasoned teams not only know how to make the most of dedicated programs such as Selenium, JMeter, and TestComplete, but also master tried-and-true techniques and comply with established standards. This is confirmed by their track record and industry-recognized certifications. An accomplished outsourcing firm will establish a robust end-to-end flow for you to support the quality of your digital products.